- Welcome to CodeWalrus.

Recent posts

#1

Randomness / Re: I gut lunix and i got 6 vi...

Last post by Dream of Omnimaga - Yesterday at 08:52:42 PM

test

#2

MTVMG/Music 2000 series talk & showcase / Re: Dream of Omnimaga's music ...

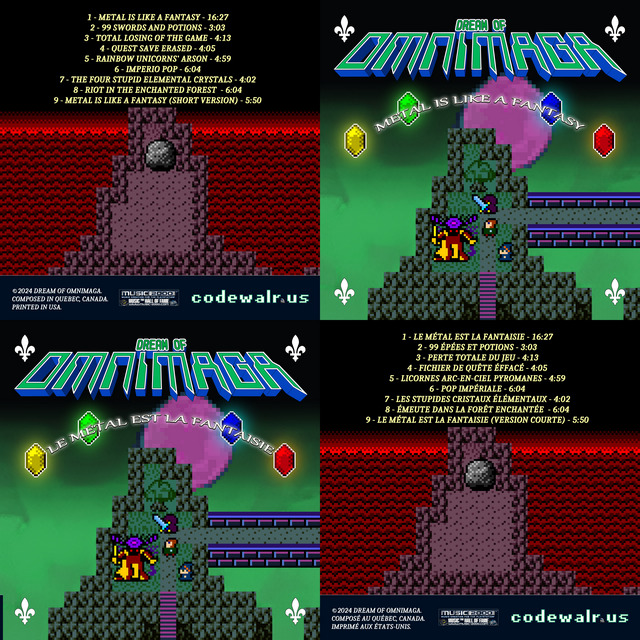

Last post by Dream of Omnimaga - April 19, 2024, 02:03:42 AM

The electronic game metal album "Metal is Like a Fantasy" was released earlier than planned, at 12 AM France time instead of Quebec time, due to a violent glow-in-the-dark unicorn storm that just hit Quebec City. I managed to end the invasion quickly by releasing the album in that different time zone to end their madness. Here are the links to all the stores where it's available:

https://music.youtube.com/playlist?list=OLAK5uy_nubkt-52M8bHFk0FvILExSqNuRbAnRLEE

https://djomnimaga.bandcamp.com/album/metal-is-like-a-fantasy

https://mirlo.space/dream-of-omnimaga/release/metal-is-like-a-fantasy

https://jam.coop/artists/dream-of-omnimaga/albums/metal-is-like-a-fantasy

https://artcore.com/release/76041/metal-is-like-a-fantasy

https://music.apple.com/gb/album/metal-is-like-a-fantasy/1741187988

https://music.amazon.ca/albums/B0D1NPKGF2

https://www.qobuz.com/ca-en/album/metal-is-like-a-fantasy-dream-of-omnimaga/gqji4bjocwrna

https://tidal.com/browse/album/357290038

https://www.deezer.com/us/album/573117591

https://web.napster.com/album/alb.4501713057584730

① Metal is Like a Fantasy

Epic metal track inspired by "Blind Guardian" and "Rhapsody of Fire", featuring remixes of "Be my Best", "Firestorm", "Hardcore is Like a Fantasy", "Maximum Liquidation" and "Capture of the Far East Desert".

② 99 Swords and Potions

This one is about old JRPGs where you could hold an unrealistically high number of items and equipment in your otherwise tiny backpack as you traveled from one dungeon to another. It shows the shift towards the electronic and gaming side of the music even more than the other tracks, and for some reason it sounds reminiscent of some "Jean-Jacques Goldman" material from France.

③ Total Losing of The Game

Among all the tracks on this album, this is probably the closest to my typical electronic metal style. Inspired by "Dream of Omnimaga" and "Of Hope and Success", this track about arcade games is a remix of both "Super Ultimate Mega Doom" and "Scream", combined into one feel-good melodic metal track.

④ Quest Save Erased

This track was inspired by a recent "AVGN" episode where the Nerd loses his "Final Fantasy VI" save data after a game crash, and it is about the unstable nature of old game and software save media. It is also a remix of my UK hardcore track "Rave Maelstrom".

⑤ Rainbow Unicorns' Arson

This one tries to have a name that is over the top, and it's an electronic power metal remix of my old tracks "The Winter Trance", "The Summer Trance" and "Techno Strike". This track introduces low piano bass notes to the overall style.

⑥ Imperio Pop

Inspired by pop music (especially from the 80's), this track ended up sounding a bit reminiscent of the band "Heavenly" in its electronic power metal form.

⑦ The Four Stupid Elemental Crystals

This track is about generic role-playing games from the late 80's and early 90's where all you had to do was search for the four elemental crystals to save the world, and about now having to search for them yet again.

⑧ Riot in the Enchanted Forest

This track is a follow-up to the previous one and adds the extra sound heard in "Imperio Pop". It is inspired by Nightwish to a certain extent.

⑨ Metal is Like a Fantasy (Short version)

It is a remix of my track "Hardcore is Like a Fantasy" into electronic power metal, but it has a few more synth effects than my 2011-2023 tracks of this genre.

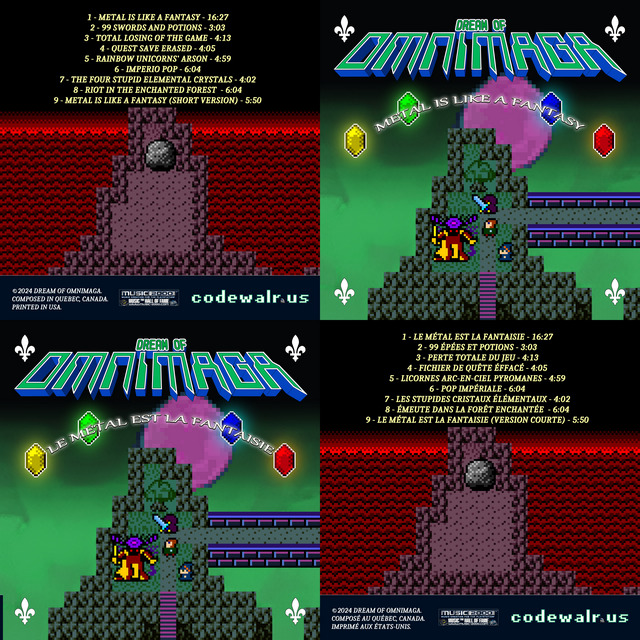

#3

Site News & Announcements / Re: CodeWalrus turns 9 year ol...

Last post by Dream of Omnimaga - April 01, 2024, 02:54:35 PM

New theme

#4

Hardware / Re: Pi84 - The Raspberry Pi po...

Last post by Dream of Omnimaga - March 31, 2024, 01:19:21 AM

Heya, since DarkestEx was last active in 2021, it's possible that he won't see your messages.

#5

Hardware / Re: Pi84 - The Raspberry Pi po...

Last post by ayoub - March 31, 2024, 01:08:13 AM

@DarkestEx

Hi there, I really need this TI-83 Pi calc or TI-84 Pi. Can I buy it, please? How much? You noted some are for sale.

If you are still making them, I really need your help. I want to buy one.

#6

[Completed] CodeWalrus Tools (Web/Android/PC) / Re: CodeWalr.us Post Notifier ...

Last post by ayoub - March 30, 2024, 11:54:13 PM

Hi DarkestEx,

I want to buy a TI-83 Plus Pi calculator.

#7

Hardware / Re: Pi84 - The Raspberry Pi po...

Last post by ayoub - March 30, 2024, 11:51:32 PM

Hi @DarkestEx

I really want to buy a TI-83 Plus Pi.

Do the keys work, and how much are you charging?

#8

Site News & Announcements / Fourmz fixed

Last post by Dream of Omnimaga - March 24, 2024, 10:41:14 PM

Forums have been fixed. Nothing was really wrong, but it had been forever since we last did an SMF reinstall with all the mods reinstalled. There was also something on the board index that made the page slow to load, which has been fixed.

#9

Hardware / Re: Pi84 - The Raspberry Pi po...

Last post by opka_coders_ dsa - March 12, 2024, 10:33:37 PM

Hi there,

You have a really creative and awesome calculator.

Is it possible to buy your TI-84 calculator with the Pi inside it, fully working? I would love to buy it. I will pay for it.

Also, does it have online access? For example, can I use Symbolab or play games on it?

Kind regards

#10

Music Showcase & Production with other softwares / Re: Not a Number: Absolution (...

Last post by Dream of Omnimaga - March 11, 2024, 02:02:16 AM

I like this! This sounds quite melodic so it's quite my cup of tea. I should go check your Bandcamp tomorrow to listen to your older stuff again.
